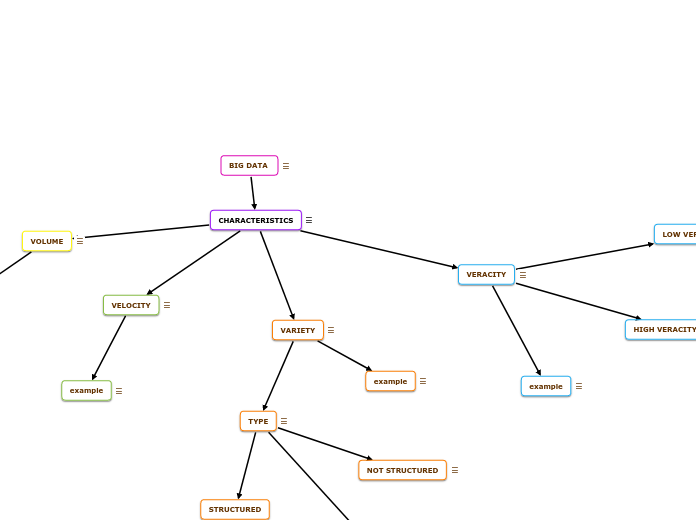

BIG DATA

Data sets, or combinations of data sets, whose size (volume), complexity (variability), and rate of growth (velocity) make them difficult to capture, manage, process, or analyze with conventional technologies and tools.

CHARACTERISTICS

These characteristics are known as the four V's: volume, veracity, variety, and velocity.

VOLUME

The size of the data sets that must be analyzed and processed, which now often reaches terabytes or even petabytes. Data at this scale requires storage and processing technologies that are distinct from traditional storage and processing capabilities.

example

An example of a high-volume data set would be all credit card transactions made within Europe in a single day.
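
Because a data set of this volume cannot be loaded into memory at once, processing it usually means working through it in pieces. The sketch below illustrates that idea on a single machine, assuming a hypothetical CSV of transactions with `country` and `amount` columns; the function name `totals_by_country` and the sample rows are illustrative only, and a real system would spread this work across many machines with a distributed framework.

```python
import csv
import io
from collections import defaultdict
from itertools import islice


def totals_by_country(source, batch_size=10_000):
    """Sum transaction amounts per country, reading `source` in fixed-size
    batches so memory use stays bounded regardless of the file's size."""
    reader = csv.DictReader(source)
    totals = defaultdict(float)  # float for brevity; money would normally use Decimal
    while True:
        batch = list(islice(reader, batch_size))  # pull at most batch_size rows
        if not batch:
            break
        for row in batch:
            totals[row["country"]] += float(row["amount"])
    return dict(totals)


# Tiny in-memory stand-in for one day's transaction file (hypothetical data).
sample = io.StringIO(
    "country,amount\n"
    "DE,12.50\n"
    "FR,40.00\n"
    "DE,7.50\n"
)
print(totals_by_country(sample, batch_size=2))
```

The batch size only controls how many rows are held at once; the result is the same for any value.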

VERACITY

The quality of the data being analyzed.

HIGH VERACITY DATA

High-veracity data has many records that are valuable to analyze and that contribute significantly to the overall results.

LOW VERACITY DATA

Low-veracity data contains a high percentage of meaningless data. The part of these data sets that has no value is called noise.

example

An example of a high-veracity data set would be data from a medical experiment or trial.
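
One way to make the distinction between valuable data and noise concrete is to apply a validity rule to each record and measure how much of the data set is noise. This is a minimal sketch assuming hypothetical heart-rate readings from a trial; the field names, the `is_valid` rule, and its 20-250 bpm range are assumptions chosen for illustration, not a standard.

```python
def is_valid(reading):
    """Hypothetical validity rule: a reading counts as valuable data only if it
    has a patient ID and a numeric value in an assumed plausible range (20-250 bpm)."""
    value = reading.get("bpm")
    return (
        bool(reading.get("patient_id"))
        and isinstance(value, (int, float))
        and not isinstance(value, bool)  # bool is a subclass of int; reject it
        and 20 <= value <= 250
    )


def split_signal_noise(readings):
    """Separate readings that pass the rule from those that are noise."""
    signal = [r for r in readings if is_valid(r)]
    noise = [r for r in readings if not is_valid(r)]
    return signal, noise


readings = [
    {"patient_id": "p1", "bpm": 72},
    {"patient_id": "p2", "bpm": -5},     # impossible value
    {"patient_id": "", "bpm": 80},       # missing patient ID
    {"patient_id": "p3", "bpm": "n/a"},  # not a number
]
signal, noise = split_signal_noise(readings)
noise_ratio = len(noise) / len(readings)
print(f"{len(signal)} valid, {len(noise)} noise, noise ratio {noise_ratio:.0%}")
```

A low noise ratio corresponds to high-veracity data; the rule itself must come from knowledge of the domain.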

VARIETY

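Variety makes Big Data really big. Big Data comes from a wide variety of sources and generally falls into one of three types: structured, semi-structured, and unstructured.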

example

An example of a high-variety data set would be the CCTV video and audio files generated at various locations in a city.

TYPE

The variety of data types often requires specialized algorithms and processing capabilities.

STRUCTURED

Databases, with data organized in tables that follow a fixed schema.

UNSTRUCTURED

Documents, videos, audio files, etc.

SEMI-STRUCTURED

Software, spreadsheets, reports.
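
To illustrate why each type needs its own processing, this sketch routes input to a different hypothetical handler per type: a fixed-schema CSV table for structured data, line-delimited JSON records for semi-structured data (an assumed example whose fields can differ from record to record), and free text for unstructured data, from which features such as word counts must first be extracted. The handler names and formats are illustrative assumptions.

```python
import csv
import io
import json
import re
from collections import Counter


def handle_structured(text):
    # Fixed schema: every row has the same named columns.
    return list(csv.DictReader(io.StringIO(text)))


def handle_semi_structured(text):
    # Self-describing records whose fields may vary between records.
    return [json.loads(line) for line in text.splitlines() if line.strip()]


def handle_unstructured(text):
    # No schema: features have to be extracted, here simple word counts.
    words = re.findall(r"[a-z']+", text.lower())
    return dict(Counter(words))


HANDLERS = {
    "structured": handle_structured,
    "semi-structured": handle_semi_structured,
    "unstructured": handle_unstructured,
}


def process(kind, text):
    """Dispatch the input to the handler registered for its data type."""
    return HANDLERS[kind](text)


print(process("structured", "id,amount\n1,9.99\n2,5.00\n"))
print(process("semi-structured", '{"id": 1, "tags": ["a"]}\n{"id": 2}\n'))
print(process("unstructured", "The camera saw the car."))
```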

VELOCITY

The speed at which data is generated. High-velocity data is generated at such a rate that it requires different (distributed) processing techniques.

example

An example of data generated at high velocity would be Twitter messages or Facebook posts.
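
A common distributed technique for high-velocity data is to partition the incoming stream by key so that several workers can process it in parallel, then merge their partial results. The following is a single-process simulation of that pattern under stated assumptions: the worker count, the hashtag used as the key, and the function names are hypothetical, and a production system would run each worker on a separate machine.

```python
import zlib
from collections import Counter

NUM_WORKERS = 3  # hypothetical cluster size


def partition(key, num_workers=NUM_WORKERS):
    # Stable hash (crc32) so the same key always maps to the same worker.
    return zlib.crc32(key.encode("utf-8")) % num_workers


def process_stream(messages, num_workers=NUM_WORKERS):
    """Route each hashtag to one worker, count per worker, then merge the
    partial counts (a single-process simulation of distributed processing)."""
    workers = [Counter() for _ in range(num_workers)]
    for text in messages:
        for tag in (w.lower() for w in text.split() if w.startswith("#")):
            workers[partition(tag, num_workers)][tag] += 1
    merged = Counter()
    for partial in workers:
        merged.update(partial)  # add each worker's counts to the total
    return merged, workers


stream = ["Goal! #football", "#Football fans celebrate", "Rain again #weather"]
totals, per_worker = process_stream(stream)
print(totals.most_common())
```

`zlib.crc32` is used instead of the built-in `hash()` because Python salts string hashes per process, which would send the same key to different workers from one run to the next.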