Script

Challenges

Where I fit in

Passionate

Strengths

Engagement

Why SynergySol

Strategic location

AI Potential

Experience

I’ve also been an advisor at KaggleX for about four years, guiding smart mentees on projects like GenAI agents and LLM-based recommendation systems using GCP.

and several healthcare-focused ML and GenAI projects at Keylead

AI-driven marketing for Cinere

I’ve led major projects in banking (Saman, Mellat)

Introducing

with over 10 years of hands-on experience in applied machine learning and generative AI.

I have degrees in Math, Computer Science, and AI

Hi Synergy Sol Trading Team!

Thank you for this opportunity

Hugging Face

APIs

Model Classes

Trainers

...

SFT

DPO

Transformers

(Main)

. . .

HQQ

AWQ

GPTQ

GGUF

Training

Distributed Training

Parallelism methods

Distributed CPUs

DeepSpeed

Accelerate

Training API

Optimizers

Fine-tuning

Trainer

Inference

Agents

LLMs

Prompt engineering

Optimizing inference

Caching

Serving

Pipeline API

Pipeline

Base Classes

Preprocessors

Feature extractors

Tokenizers

Models

Loading models

Text Representation

NN BASED EMBEDDINGS

Continuous Space

Fine-tuning Based

embeddings

Language-Specific Embeddings

Multi Modal Embeddings

Domain-Specific Embeddings

SentiBERT

PatentBERT

ClinicalBERT

QA.SciBERT

BioBERT

Knowledge-Enriched Embeddings

Cross-Lingual Embeddings

Feature Based

Embedding

Dynamic

Embeddings

Unified pre-trained

Language Model (UniLM)

BERT

GPT

ELMo

ULMFiT

CoVe

C2V

Static

Embeddings

FastText

GloVe

Word2vec

Skip-gram

CBOW

Statistical Encoding

Conceptual Embedding

Explicit Semantic

Analysis (ESA)

Dimensionality

Reduction

Feature Transformation

ICA

LDA

Feature Selection

Discrete Space

TF-IDF

Term Frequency

Bag of Words

CountVectorizer

Phase III: Opt Inference & Serving

Projects

Prompting and Guardrails

Phase IV: Chatbots and AI Agents

Practice

Anjanava Book

AI Agents in Action Book

Liu et al. Paper

Phase I: LLMOps

Ryan Doan Book: Essential Guide to LLMOps

Chip Huyen Book: AI Engineering

Phase II:

RAG Fundamentals

Projects And Articles

Research Assistant

Andrei Gheorghiu Book

Ben Auffarth Book

Theory

Phase I:

LLMs Fundamentals

Frameworks

DeepSpeed

Axolotl

Project & Articles

Fine-Tuning On Medicare Data

Llama 3.2

DeepSeek

Gemma 3

The Ultimate Guide to Fine-Tuning Paper

To article with Code

Hugging Face Courses

Working With HF

TRL

Transformers

LLM Course

Jay Alammar

Part III

Part II: Chapters 4 (Text Classification) and 6 (Prompt Engineering)

Part I

KaggleX, GenAI Agents, LLM-Based recommendation

Business Sectors

Technology and Software Development

Manufacturing

Knowledge Management

Supply Chain Management

Human Resources and Talent Management

Research and Development

Healthcare

Education and Training

Regulatory Compliance

Customer Relationship Management (CRM)

Sales and Marketing

Finance and Banking

e-Business

Basic LLM Tasks

Content Generation and Correction

Information Extraction

Text-to-Text Transformation

Semantic Search

Sentiment Analysis

Content Personalization

Ethical and Bias Evaluation

Paraphrasing

Language Translation

Text Summarization

Conversational AI

Question Answering

Banking

AI-Driven Marketing

Most research on word embedding implementations focuses on general-domain text generation. However, as the authors in [108] demonstrate, such general-domain applications do not work optimally when used in the domain-specific analysis of very large corpora, for example, in the biomedical domain.

Feature-based techniques can generate either static or dynamic embeddings. Static embeddings are non-contextual: the embedding of a word remains the same regardless of the context in which it appears. Shallow networks are used to learn such word embeddings. In dynamic embeddings, by contrast, the embedding of the same word changes based on the context, which addresses the polysemy of words.
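The static versus dynamic distinction can be illustrated with a toy sketch. Everything here is an assumption made for illustration: the three-dimensional vectors are hand-written rather than trained, and `dynamic_embedding` is a hypothetical stand-in that simply blends a word vector with the mean of its sentence. It is not how ELMo or BERT compute contextual embeddings; it only shows that a context-dependent function gives the polysemous word "bank" different vectors in different sentences, while a lookup table cannot.

```python
# Toy illustration only: hand-made 3-d vectors, not trained embeddings.
STATIC = {
    "river": [1.0, 0.0, 0.0],
    "money": [0.0, 1.0, 0.0],
    "bank": [0.5, 0.5, 0.0],
    "the": [0.0, 0.0, 1.0],
}


def static_embedding(word, sentence):
    """Static lookup: the sentence argument is deliberately ignored."""
    return STATIC[word]


def dynamic_embedding(word, sentence, mix=0.5):
    """Hypothetical contextualizer: blend the word vector with the sentence mean."""
    vectors = [STATIC[w] for w in sentence]
    mean = [sum(column) / len(vectors) for column in zip(*vectors)]
    return [(1 - mix) * w + mix * m for w, m in zip(STATIC[word], mean)]


s1 = ["the", "river", "bank"]
s2 = ["the", "money", "bank"]

# Same vector for "bank" in both sentences under the static lookup.
static_same = static_embedding("bank", s1) == static_embedding("bank", s2)
# Different vectors once the surrounding words are taken into account.
dynamic_same = dynamic_embedding("bank", s1) == dynamic_embedding("bank", s2)

print("static identical across contexts:", static_same)
print("dynamic identical across contexts:", dynamic_same)
```

In the "river" sentence the blended vector for "bank" leans toward the first dimension, and in the "money" sentence toward the second, which is the polysemy effect in miniature.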

healthcare-focused

In statistical methods, words are represented using vectors of numbers, and the corpus is represented as a collection of such vectors, forming a matrix. Such statistical methods reduce documents of arbitrary length to fixed-length lists of numbers. These vector representations were helpful since they enabled researchers to use linear algebra operations to manipulate the vectors and compute distances and similarities.
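A minimal sketch of this idea, using only the Python standard library: documents of different lengths are mapped to fixed-length count vectors (bag of words), reweighted with TF-IDF, and compared with cosine similarity. The three example documents are made up, and the IDF formula `log(N / df) + 1` is one common smoothing variant chosen here as an assumption; library implementations may use a different one.

```python
import math
from collections import Counter

# Made-up corpus; note the documents have different lengths.
docs = [
    "the cat sat on the mat",
    "the dog sat on the log",
    "stocks fell as markets closed",
]

tokenized = [d.split() for d in docs]
vocab = sorted({token for doc in tokenized for token in doc})


def bow_vector(tokens):
    """Map a token list of any length to a fixed-length count vector."""
    counts = Counter(tokens)
    return [counts[term] for term in vocab]


# The corpus as a document-term matrix: one row per document.
bow_matrix = [bow_vector(doc) for doc in tokenized]


def idf(term):
    """Inverse document frequency (assumed variant: log(N / df) + 1)."""
    df = sum(1 for doc in tokenized if term in doc)
    return math.log(len(tokenized) / df) + 1.0


idf_weights = [idf(term) for term in vocab]
tfidf_matrix = [[tf * w for tf, w in zip(row, idf_weights)] for row in bow_matrix]


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


sim_cat_dog = cosine(tfidf_matrix[0], tfidf_matrix[1])
sim_cat_stocks = cosine(tfidf_matrix[0], tfidf_matrix[2])
print(f"cat vs dog: {sim_cat_dog:.3f}, cat vs stocks: {sim_cat_stocks:.3f}")
```

The first two documents share several terms and score a positive similarity, while the first and third share none and score zero, which is the kind of distance computation the fixed-length representation makes possible.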

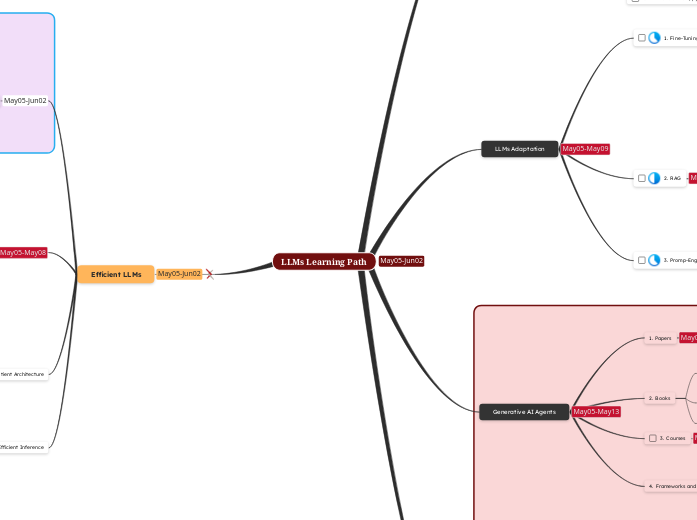

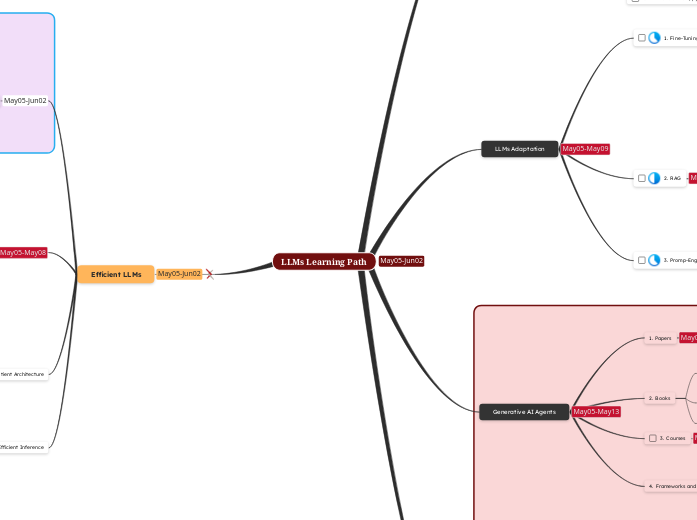

LLMs Learning Path

Efficient LLMs

Efficient Inference

System-Level Inference

Efficiency Optimization

vLLM,

DeepSpeed-Inference

Algorithm-Level Inference

Efficiency Optimization

Efficient Architecture

Long Context LLMs

MoE

Efficient Attention

Hardware-Assisted Attention

FlashAttention, vAttention

Learnable Pattern Strategies

HyperAttention

Fixed Pattern Strategies

Sparse Transformer, Longformer, Lightning Attention-2

Model Compression

Knowledge Distillation

Low-Rank Approximation

Parameter Pruning

Quantization

Quantization-Aware Training

Post-Training Quantization

Weight-Activation Co-Quantization

RPTQ, QLLM

Weight-Only Quantization

GPTQ, AWQ, SpQR

Efficient Fine-Tuning

Frameworks

Unsloth

MEFT (Q-LoRA, QA-LoRA, ...)

PEFT

Prompt Tuning

Prefix Tuning

Adapter-based Tuning

Low-Rank Adaptation (LoRA, DoRA)

AI Apps

Frameworks

Local

Gradio

Jan

Deployment

Anyscale

Hugging Face Inference Endpoints

Serving

vLLM

BentoML

Integration

LlamaIndex

Scaled Multi-Agents

Local AI Agents

Local Chatbots

LLM-Based Recommendation Chatbot

Medical QA ChatBot

Research Assistant (Talking Papers)

Generative AI Agents

Frameworks and Practices

RASA

LangChain / LangGraph

CrewAI

SmolAgents

Courses

HF Agents Course

Books

Advances and Challenges in Foundation Agents, Liu

AI Agents in Action, Micheal Lanham

https://arxiv.org/pdf/2401.03428

https://www.arxiv.org/pdf/2504.01990

LLMs Adaptation

Output Configuration

Guardrails

Prompt Eng

RAG

20 Types of RAG Arch

LangChain

Indexes

Weaviate

FAISS

Pinecone

Qdrant

Chroma

Prompt

Agent

Chains

Memory

Fine-Tuning (Transfer Learning, Strategies)

- Instruction-tuning

- Alignment-tuning

- Transfer Learning

Fundamentals

HF Transformers, PyTorch

HF Course:

https://huggingface.co/docs/transformers/en/quicktour

Language Modeling and LLMs

https://arxiv.org/pdf/2302.08575

ChatGPT Discussion:

https://chatgpt.com/share/6819003f-e674-8005-af8b-11a7ef37bd70

https://arxiv.org/pdf/2303.18223

https://arxiv.org/pdf/2402.06853

https://arxiv.org/pdf/2303.05759

https://wandb.ai/madhana/Language-Models/reports/Language-Modeling-A-Beginner-s-Guide---VmlldzozMzk3NjI3

- Full Language Modeling

- Prefix Language Modeling

- Masked Language Modeling

- Unified Language Modeling
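One way to tell these objectives apart is by which positions each token may attend to. The sketch below builds the attention masks under the common reading of the terms, which is an assumption here since the notes do not define them: full (causal) language modeling sees only earlier tokens, prefix language modeling sees the whole prefix plus earlier tokens, and masked language modeling sees the whole sequence. Unified language modeling is usually described as training one shared model with several of these masks. The function names are hypothetical.

```python
def causal_mask(n):
    """Full (causal) LM: position i attends to positions 0..i."""
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]


def prefix_mask(n, prefix_len):
    """Prefix LM: bidirectional inside the prefix, causal after it."""
    return [
        [1 if (j < prefix_len or j <= i) else 0 for j in range(n)]
        for i in range(n)
    ]


def bidirectional_mask(n):
    """Masked LM: every position attends to every position."""
    return [[1] * n for _ in range(n)]


# Rows are query positions, columns are key positions (1 = may attend).
for name, mask in [
    ("causal", causal_mask(4)),
    ("prefix (len 2)", prefix_mask(4, 2)),
    ("bidirectional", bidirectional_mask(4)),
]:
    print(name)
    for row in mask:
        print(" ", row)
```

Setting `prefix_len` to 0 recovers the causal mask and setting it to the sequence length recovers the bidirectional one, which shows the prefix objective sitting between the other two.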

From Seq-to-Seq and RNN

to Attention and Transformers

Papers

Lewis- Wolf

Vasilev