by Rhys Ward, 6 years ago


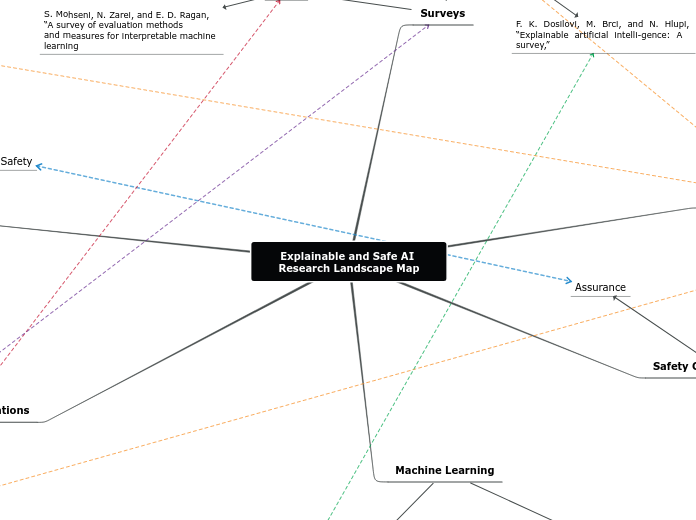

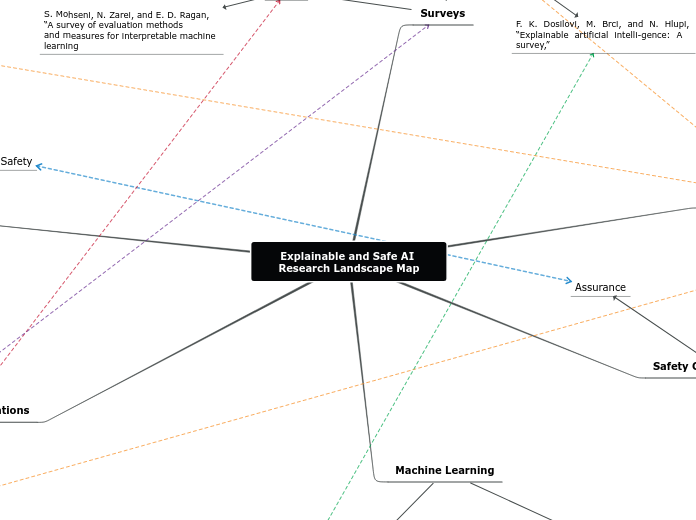

Explainable and Safe AI Research Landscape Map


More like this

Z. C. Lipton, “The mythos of model interpretability,”

S. T. Mueller, “Explanation in Human-AI Systems: A Literature Meta-Review, Synopsis of Key Ideas and Publications, and Bibliography for Explainable AI,”

A. Avati, K. Jung, S. Harman, L. Downing, A. Ng, and N. H. Shah, “Improving palliative care with deep learning,”

L. A. Hendricks, Z. Akata, M. Rohrbach, J. Donahue, B. Schiele, and T. Darrell, “Generating visual explanations,”

C. Otte, “Safe and interpretable machine learning: A methodological review,”

C. Olah, L. Schubert, and A. Mordvintsev, “Feature visualization: How neural networks build up their understanding of images,”


Saliency maps

T. Zahavy, N. B. Zrihem, and S. Mannor, “Graying the black box: Understanding DQNs,” https://arxiv.org/abs/1602.02658, 2016.

M. T. Ribeiro, S. Singh, and C. Guestrin, “‘Why should I trust you?’: Explaining the predictions of any classifier,” https://arxiv.org/abs/1602.04938, 2016.