The modern decision tree has its roots in the work of John Ross Quinlan, a computer science researcher and programmer. He developed a method of decision-making that works through a tree of choices, and a decision tree diagram is simply the graphical representation of those choices. We won’t be going into deep math here. Instead, we will look at how simple yet powerful a decision tree can be.

What is a decision tree, and what is a leaf node?

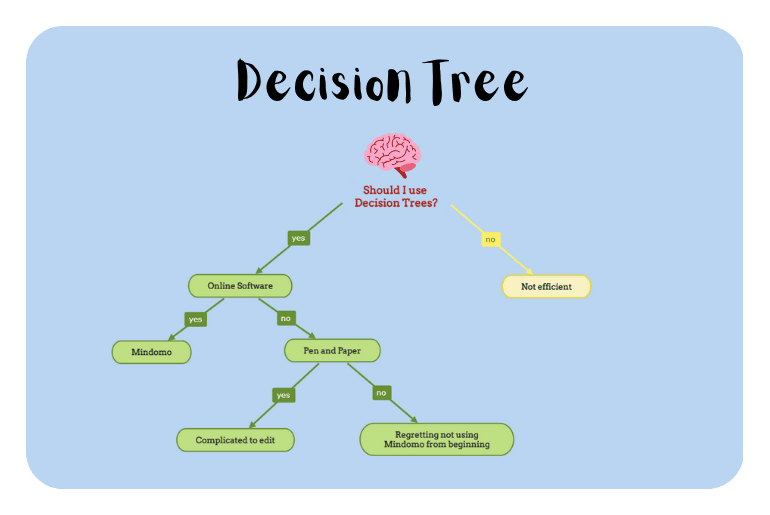

A decision tree can help with the decision-making process, and a large decision can sometimes be broken into multiple trees. Decision trees tend to look like flowchart diagrams, but the main difference is that they are used as a decision-making tool. A decision tree is easy to understand, and decision tree analysis is just as approachable. It’s not always about arriving at an expected value.

A decision tree is a flowchart-like diagram that shows the various outcomes from a series of decisions. It can be used as a decision-making tool, for research analysis, or for planning strategy. A primary advantage of using a decision tree is that it is easy to follow and understand. Even the terminology, such as leaf node, is there to keep it easy.

Decision trees start out simple: the first node represents the initial decision or question, and each path ends with a particular decision. At times, a tree can accurately predict how a situation will turn out by following its decision process. Either way, every run through a decision tree leads to a final decision.

Decision trees can also predict certain outcomes and help with statistical learning. They are a foundational technique in machine learning and can, for example, help with regression problems. This, of course, relies on extensive amounts of data to determine various outcomes, predictions, and rules.

When using a decision tree diagram, you won’t be using that much hard data. You’ll also be using a single decision tree at a time to reduce complexity.

How do decision trees work?

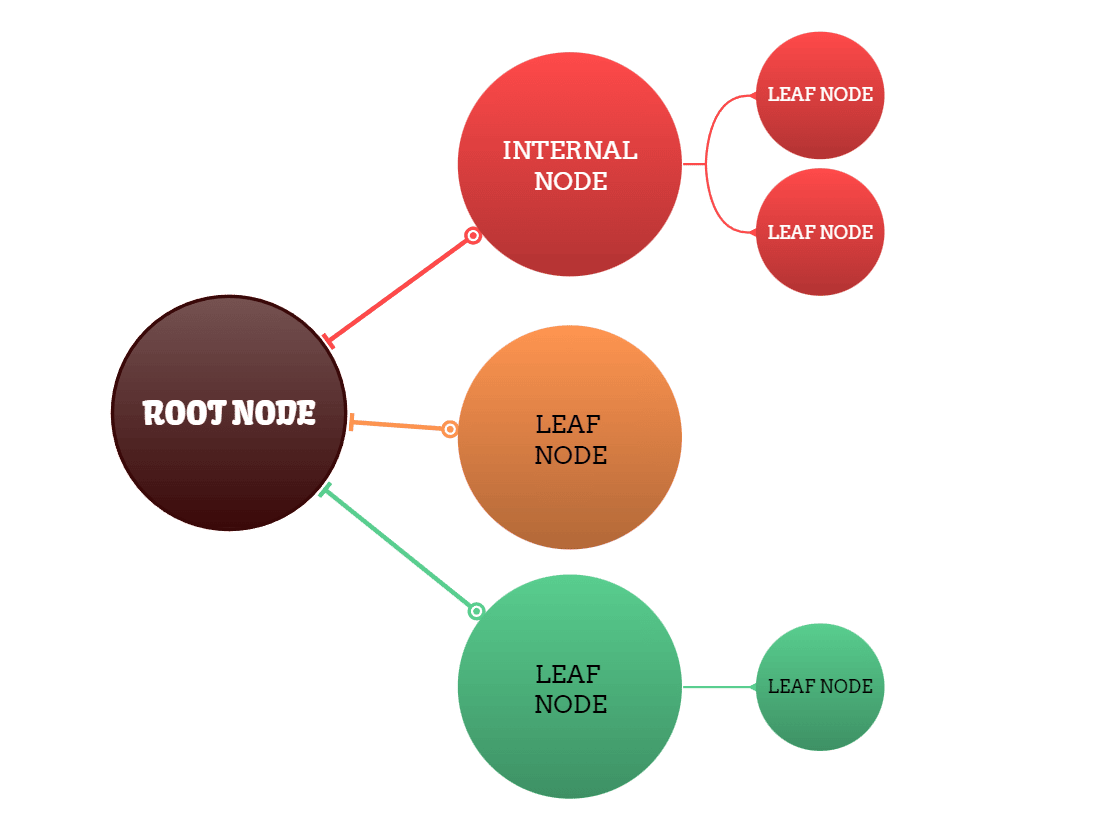

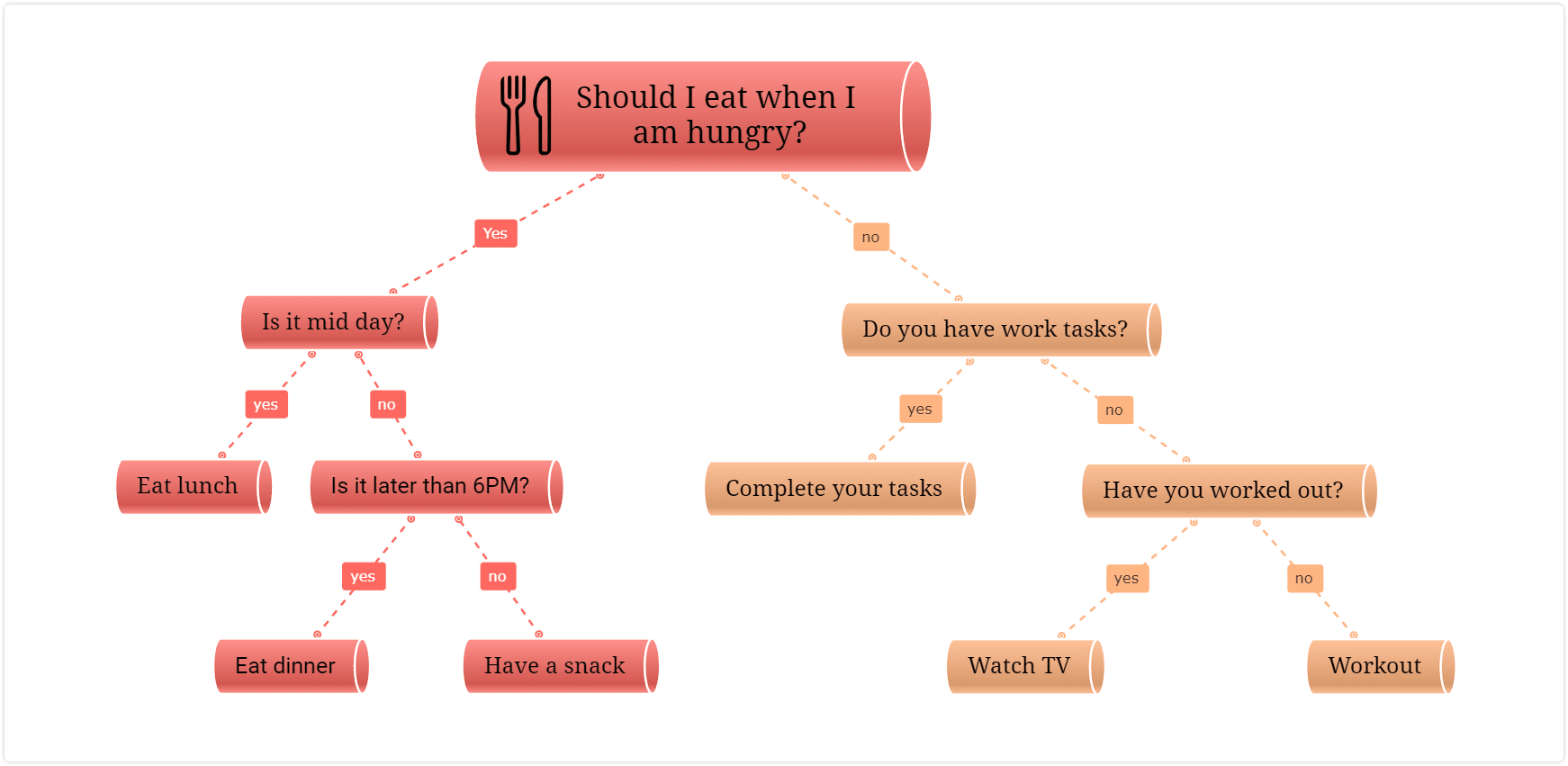

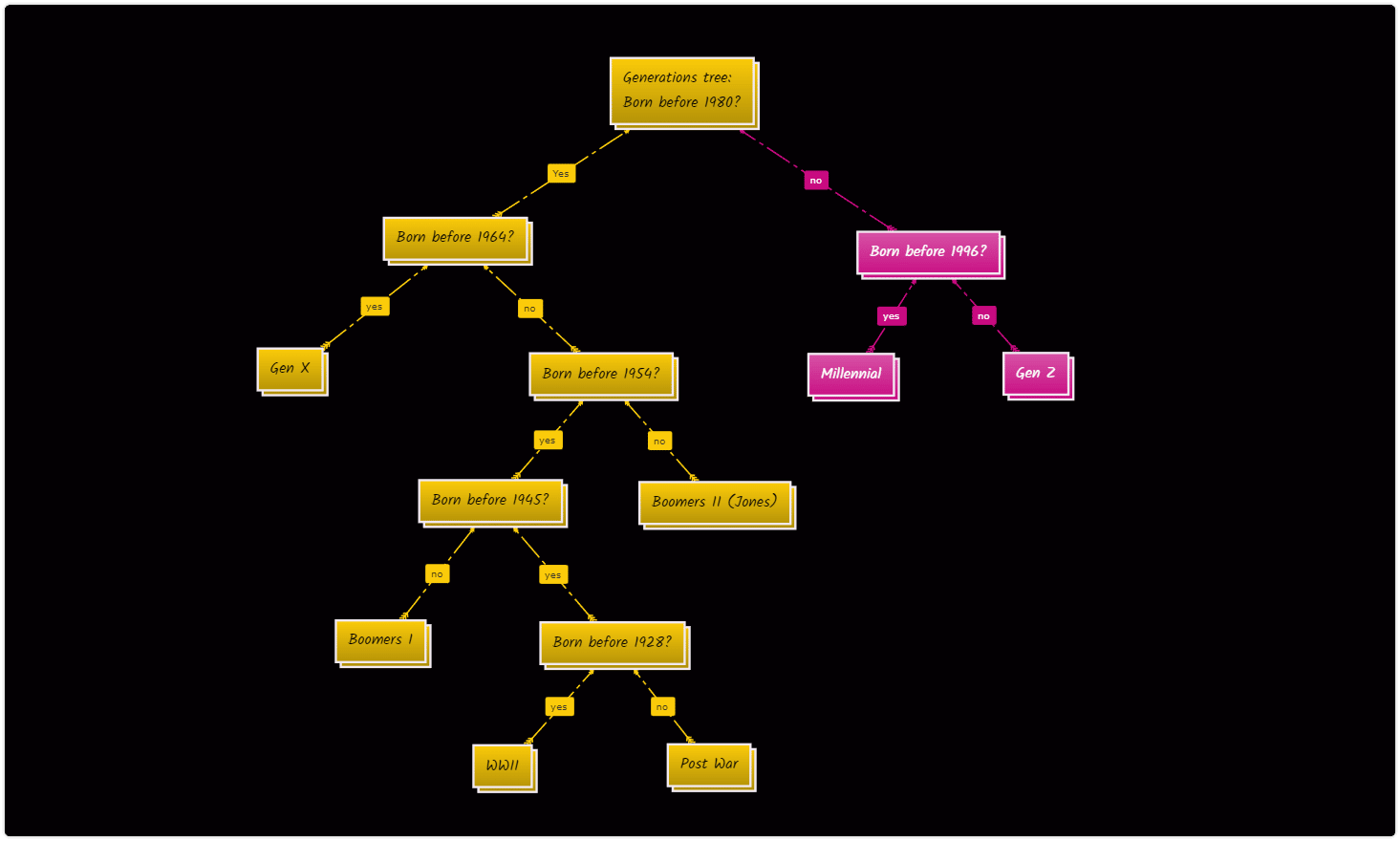

You can use a decision tree either to describe something or to predict an outcome. The tree structure is constructed in the same way, whichever route you take. The end goal is to show all possible outcomes based on the decisions made before them. Each decision tree starts with the first decision to make. This is known as the root node, which is usually drawn as a rectangle, and there is always exactly one.

The decisions are connected by ‘branches’ that lead to further decision nodes. The objective is to choose one option at each decision node. That choice then branches out to a new set of decision nodes. We continue along this path until we hit a node that has no further branches. These are known as the leaf nodes. As you might expect, a tree structure will have multiple leaf nodes.
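The structure described above can be sketched in a few lines of Python. This is only an illustration, not how any particular tool stores its trees: the `Node` class, the `count_leaves` and `follow` helpers, and the weather example are all made up for this sketch.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """One node of a decision tree (hypothetical structure for illustration)."""
    label: str
    # Maps each choice (a branch) to the child node it leads to.
    branches: dict = field(default_factory=dict)

    def is_leaf(self):
        # A leaf node has no outgoing branches.
        return not self.branches


def count_leaves(node):
    """Count the leaf nodes reachable from this node."""
    if node.is_leaf():
        return 1
    return sum(count_leaves(child) for child in node.branches.values())


def follow(node, choices):
    """Start at the given node and take one branch per choice, in order."""
    for choice in choices:
        node = node.branches[choice]
    return node


# The root node is the single starting decision.
root = Node("What is the weather?", {
    "sunny": Node("Is it hot?", {
        "yes": Node("Go to the beach"),
        "no": Node("Go for a walk"),
    }),
    "rainy": Node("Stay home"),
})

print(count_leaves(root))                    # number of leaf nodes
print(follow(root, ["sunny", "no"]).label)   # outcome of one path
```

Following the choices `["sunny", "no"]` walks from the root node along two branches and stops at a leaf node, which is the final decision for that path.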

What goes into those decision nodes and, ultimately, the leaf nodes can vary. Typically it’s something that can be expressed as numerical or categorical data. That way, you’re able to review the data and begin analyzing the outcomes. When you build multiple decision trees on the same topic, it can be thought of as a manual type of data mining.

You also cannot skip the initial decision or any of the decision nodes along a path; you must go through them in order to follow the decision process correctly. You can run through a decision tree multiple times to see the different paths and outcomes. It can show you all the complex decisions or chance events and help you understand the whole example.
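To see every path at once rather than one run at a time, you can list all the routes from the root node to each leaf node. The sketch below assumes a tree written as nested Python dictionaries; the `all_paths` function and the job-offer example are hypothetical and exist only for this illustration.

```python
def all_paths(tree, path=()):
    """Yield (steps, outcome) for every root-to-leaf path.

    A decision node is a dict with a "question" and its "branches";
    anything else is treated as a leaf node (the final outcome).
    """
    if not isinstance(tree, dict):
        yield path, tree
        return
    question = tree["question"]
    for choice, subtree in tree["branches"].items():
        # Each step records the question and the choice made, in order.
        yield from all_paths(subtree, path + ((question, choice),))


# Hypothetical example: deciding whether to accept a job offer.
job_tree = {
    "question": "Is the salary higher?",
    "branches": {
        "yes": {
            "question": "Is the commute longer?",
            "branches": {
                "yes": "Negotiate remote days",
                "no": "Take the job",
            },
        },
        "no": "Stay in current job",
    },
}

paths = list(all_paths(job_tree))
for steps, outcome in paths:
    route = " -> ".join(f"{question} {choice}" for question, choice in steps)
    print(route, "=>", outcome)
```

Each printed line is one complete run through the tree, starting at the initial decision and passing through every decision node on the way to the outcome.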

When should decision trees be used?

Since decision trees work like small-scale data mining exercises, they suit a variety of use cases. Use a decision tree whenever you want to see the potential outcomes for a set of data points.

A common example is loan officers, who use decision trees in their decision-making process. They use a decision tree maker to build a set of decision rules based on the client. Each choice made at a decision node reveals more about the client’s risk. Eventually, the tree helps determine whether to grant the loan or not.
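A set of decision rules like this maps naturally onto nested `if` statements, where each check is a decision node and each `return` is a leaf node. The following is a toy sketch only: the `loan_decision` function, its inputs, and every threshold in it are invented for illustration and do not reflect real lending criteria.

```python
def loan_decision(credit_score, annual_income, loan_amount):
    """Walk a tiny, made-up loan decision tree and return the outcome."""
    # Root node: is the credit score acceptable? (hypothetical threshold)
    if credit_score < 600:
        return "decline"
    # Decision node: is the loan too large for the income? (hypothetical ratio)
    if loan_amount > 5 * annual_income:
        return "decline"
    # Decision node: strong credit is approved directly.
    if credit_score >= 720:
        return "approve"
    # Leaf node for the remaining, borderline clients.
    return "refer to manual review"


print(loan_decision(credit_score=750, annual_income=50_000, loan_amount=100_000))
print(loan_decision(credit_score=650, annual_income=50_000, loan_amount=100_000))
print(loan_decision(credit_score=550, annual_income=80_000, loan_amount=10_000))
```

Writing the rules this way makes the order of the decisions explicit, which is the same thing the diagram shows visually.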

A decision tree can also be used for personal decisions. Those who want to practice their decision-making techniques can do this often for themselves. Decision tree analysis can help you decide which job to take (one decision tree will show you the pros and cons). It can show the outcome of a specific set of circumstances and help you make real-life decisions. Decision trees can even help you set new habits, for example changing your eating habits, by visually representing each branch as a logical order of events.

Sometimes the purpose of a decision tree is to reach a target variable or expected figure. In that case, you are building out the path that leads to that figure.

Another use of decision trees can be in education. You can use it in school for different courses, to explain a school subject, or use it as a classification tree.

Which algorithm is best for decision trees?

There are several algorithms or methods you can use with your decision tree. When you are starting out, begin with the simplest one. That’s where ID3, or Iterative Dichotomiser 3, comes in: it helps you make the most of your data points. It can also help with reaching an expected value through the decision-making process.

It gets that name because it repeatedly splits decision nodes into two or more decision nodes until it reaches the leaf nodes. It needs little data preparation, and it’s the one most people commonly see. At each split, ID3 picks the attribute that most reduces entropy, a measure of disorder or chaos in the data, which keeps the potential outcomes as clear as possible. You may hear of other techniques, such as the CART algorithm. That’s for another time. Decision trees can easily become complex, which makes it harder to get to the expected value. You also don’t need to worry about regression trees for now, which are a different type of tree.
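ID3 is usually described as choosing, at each node, the attribute with the highest information gain, that is, the largest drop in entropy after the split. The sketch below shows only that selection step, not a full ID3 implementation. The function names and the tiny `rows` dataset are assumptions made up for this example.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    return -sum((n / total) * log2(n / total) for n in Counter(labels).values())


def information_gain(rows, attribute, target):
    """Entropy of the target before the split minus the weighted entropy after."""
    before = entropy([row[target] for row in rows])
    after = 0.0
    for value in {row[attribute] for row in rows}:
        subset = [row[target] for row in rows if row[attribute] == value]
        after += len(subset) / len(rows) * entropy(subset)
    return before - after


def best_attribute(rows, attributes, target):
    """Pick the attribute to split on: the one with the highest information gain."""
    return max(attributes, key=lambda a: information_gain(rows, a, target))


# Made-up data: should we play outside?
rows = [
    {"outlook": "sunny", "windy": "no", "play": "yes"},
    {"outlook": "sunny", "windy": "yes", "play": "yes"},
    {"outlook": "rainy", "windy": "no", "play": "no"},
    {"outlook": "rainy", "windy": "yes", "play": "no"},
]

for attribute in ("outlook", "windy"):
    print(attribute, information_gain(rows, attribute, "play"))
print("split on:", best_attribute(rows, ["outlook", "windy"], "play"))
```

In this toy dataset, `outlook` separates the "yes" and "no" answers perfectly while `windy` tells you nothing, so the first split would be on `outlook`. A full ID3 implementation would then repeat the same step on each resulting subset until it reaches the leaf nodes.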

While there are more refined machine learning algorithms, ID3 is a good fit for those learning decision-making techniques. You will be building effective decision trees in no time.

Where to start with building a decision tree model and multiple trees?

You can consider using Mindomo as a tree diagram maker to make a decision tree. Your decision tree can have everything from the root node to the leaf nodes, laid out as you need it. Once you make the first decision tree, you can easily repurpose it for multiple trees. Just remember to start the root node at the top and prepare for many possible outcomes.

Keep it smart, simple, and creative!

The Mindomo Team