We are going to get more technical with our decision trees: internal nodes, leaf nodes, and the like. A decision tree works toward a predetermined target variable, which makes it a good fit for categorical data. Learning from examples whose target is already known is called supervised learning, and supervised learning is what we want to achieve with our decision tree examples. We will also get more complex and build out regression trees (regression models).

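A decision tree whose target is a category is called a classification tree. One whose target is a continuous number is called a regression tree. Both use the same structure of nodes and branches, so the fundamentals you learn here carry over directly to regression models. The decision process you sketch by hand is essentially what machine learning algorithms for decision trees automate, just faster and with more data.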
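To make the classification/regression distinction concrete, here is a minimal Python sketch of what a single leaf predicts in each case. The targets are hypothetical values assumed to have reached the same leaf. The sketch assumes a classification leaf uses a majority vote and a regression leaf uses the mean. That is the common convention (for example, in CART), not the only option.

```python
from collections import Counter
from statistics import mean

# Hypothetical training targets that all ended up in the same leaf.
class_labels = ["coat", "coat", "t-shirt", "coat"]   # categorical target
numeric_targets = [12.0, 15.0, 9.0, 14.0]            # continuous target

def classification_leaf(labels):
    """A classification tree leaf predicts the most common class among its examples."""
    return Counter(labels).most_common(1)[0][0]

def regression_leaf(values):
    """A regression tree leaf predicts the mean of its examples' target values."""
    return mean(values)

print(classification_leaf(class_labels))  # coat
print(regression_leaf(numeric_targets))   # 12.5
```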

If you are familiar with mind map examples, then building decision trees shouldn't be an issue. Take a mind map, give it a tree-like structure, and you're well on your way.

Quick note: what is a target variable?

The target variable is the one whose value you want to predict from other variables (the features). It plays the same role as the dependent variable in regression, so understanding it is important for understanding regression. The data is typically split into training and test sets: the training data teaches the model which outcome to expect, and the test data checks how well those expected values hold up. All of this is the predecessor to machine learning, and it all starts with decision trees.
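As a minimal sketch of these ideas, the Python below separates the features from the target variable and holds out part of the rows as a test set. The rows, the `outfit` target, and the helper `train_test_split` are all hypothetical and written here by hand. scikit-learn has a function with the same name, but this sketch does not use it.

```python
import random

# Hypothetical labeled rows: (features, target). "outfit" is the target variable.
data = [
    ({"temperature_c": 30, "raining": False}, "t-shirt"),
    ({"temperature_c": 25, "raining": False}, "t-shirt"),
    ({"temperature_c": 18, "raining": True}, "raincoat"),
    ({"temperature_c": 15, "raining": False}, "sweater"),
    ({"temperature_c": 12, "raining": True}, "raincoat"),
    ({"temperature_c": 5, "raining": False}, "coat"),
    ({"temperature_c": 2, "raining": False}, "coat"),
    ({"temperature_c": 22, "raining": True}, "raincoat"),
    ({"temperature_c": 16, "raining": False}, "sweater"),
    ({"temperature_c": 28, "raining": False}, "t-shirt"),
]

def train_test_split(rows, test_fraction=0.2, seed=42):
    """Shuffle a copy of the rows and hold out a fraction of them as the test set."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]

train, test = train_test_split(data)
X_train = [features for features, _ in train]  # inputs (independent variables)
y_train = [target for _, target in train]      # target (dependent) variable
print(len(train), len(test))  # 8 2
```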

Simple decision tree structure and elements

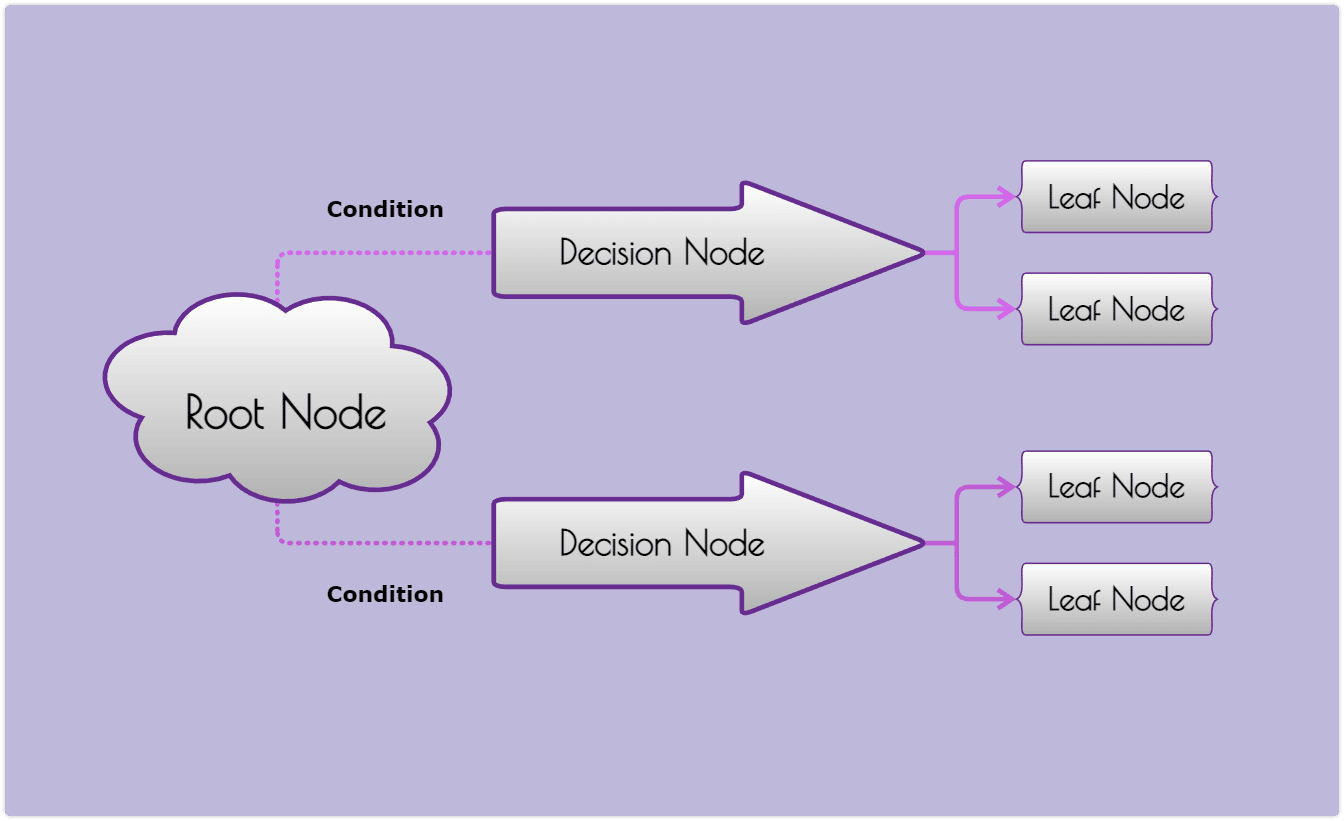

Our first example shows leaf nodes, the root node (where the default value goes), and data points. It doesn't contain actual values or a data set yet, but it starts to show you what the decision-making process can look like. Keep in mind that this is just a template decision tree, meant to show simple decision rules.

Let's take a moment to look at the image above and fully understand it. The root node is where we start, and it holds the default value. The leaf nodes are the expected outcomes, reached from the root node by the path taken. The root node can be whatever you need it to be, and each leaf node holds an expected value or outcome.

Another term for leaf nodes is terminal nodes. The conditions on the branches leaving a node cannot share the same values or leave values missing. Otherwise, the node doesn't represent a real choice. If there are missing values, you need to correct the decision tree. Use the template above as an example of how to build out a decision tree.

Even a template as simple as the one above is the basis for machine learning algorithms, because building a decision tree is a form of supervised learning. This example doesn't have continuous variables yet, which are important for regression models. It's meant to be your first visual representation of what a decision tree could look like, not the entire diagram.
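The structure described in this section can be sketched in Python. Internal nodes ask a question, and leaf (terminal) nodes hold an outcome. The `Node` class and `decide` function are hypothetical names used for illustration. `decide` starts at the root and follows one branch per node until it reaches a leaf. It raises an error when a node has no branch for an answer, which is the missing-condition problem described above.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional

@dataclass
class Node:
    """An internal node asks a question; a leaf (terminal) node holds an outcome."""
    question: Optional[Callable[[dict], str]] = None  # returns the label of the branch to take
    branches: Optional[Dict[str, "Node"]] = None      # branch label -> child node
    outcome: Optional[str] = None                     # set only on leaf nodes

    def is_leaf(self) -> bool:
        return self.outcome is not None

def decide(node: Node, case: dict) -> str:
    """Start at the root and follow one branch per node until a leaf is reached."""
    while not node.is_leaf():
        answer = node.question(case)
        if answer not in node.branches:
            raise ValueError(f"No branch for answer {answer!r}: the tree is missing a condition")
        node = node.branches[answer]
    return node.outcome

# Hypothetical template tree: one root question and two leaves.
root = Node(
    question=lambda case: "yes" if case["condition_met"] else "no",
    branches={"yes": Node(outcome="Outcome A"), "no": Node(outcome="Outcome B")},
)

print(decide(root, {"condition_met": True}))   # Outcome A
print(decide(root, {"condition_met": False}))  # Outcome B
```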

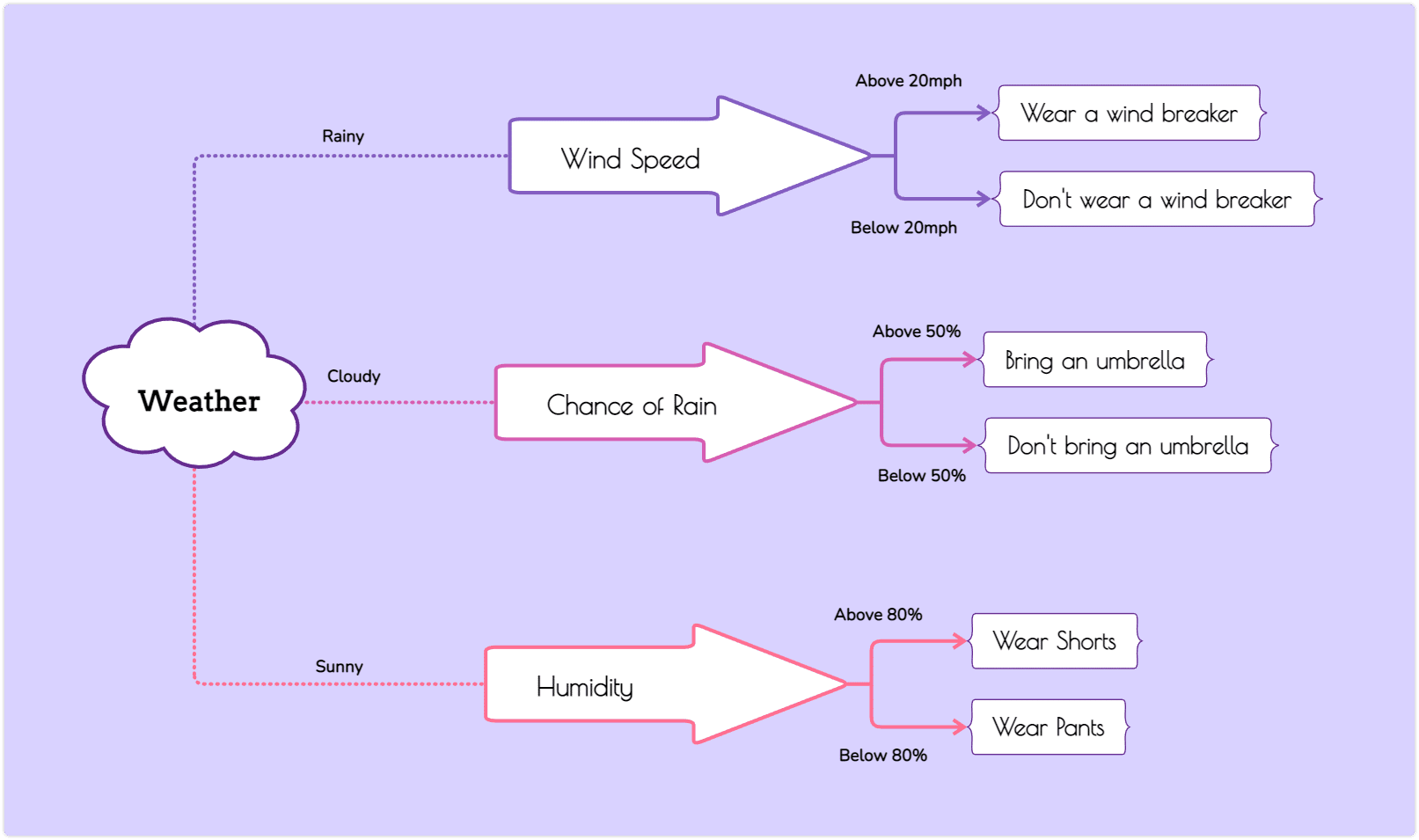

1. Weather Decision Tree Example

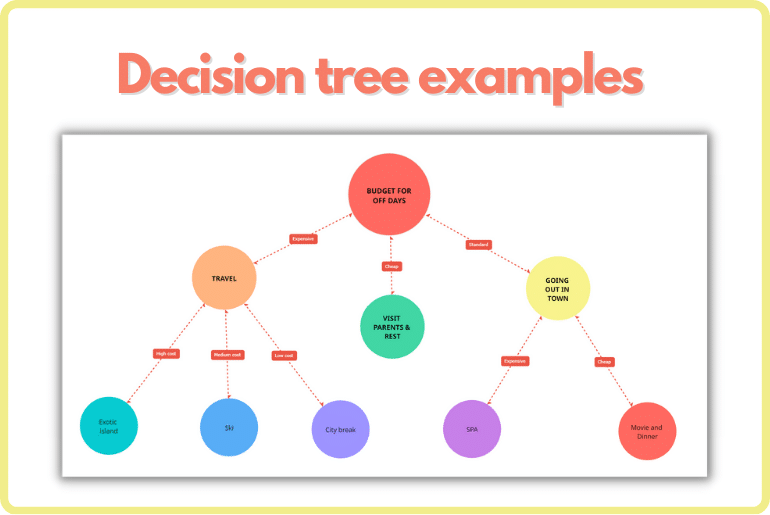

Every decision tree you make going forward will have some type of structure like this. A node doesn't need to branch out into only two options, and in the next example, we're going to take a deeper look at how decision trees tend to work.

Here we have a personal decision tree. Decision trees are often personal in nature and still help with decision-making processes: they show possible consequences without the stakes being too serious. The example above uses continuous variables in its branches. The first node is a generic topic, while each branch has data to consider.

Data also appears in the leaf nodes. The outcomes are clothing options (categories) rather than numbers, so this is really a classification problem, and the tree is a classification tree even though its branches use continuous variables. The tree shows what the minimum number or percentage should be at each stage, as well as the different courses of action available. Its outcomes also form a discrete set, since we pre-determined them. Because the branches involve probabilities, you could add a chance event to this decision tree, although the example above doesn't show one. It does, however, show clothing options as the outcome and clear decision branches.
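A hand-written version of a weather tree like this one might look like the following Python sketch. The variables `temperature_c` and `rain_chance_pct` and every threshold are assumptions made for illustration, not values read from the diagram. The inputs are continuous, but each leaf returns a category, which is why this behaves as a classification tree.

```python
def choose_clothing(temperature_c: float, rain_chance_pct: float) -> str:
    """Hypothetical weather tree: each internal node compares a continuous variable to a threshold."""
    if rain_chance_pct >= 60:   # root split on the chance of rain (assumed cutoff)
        return "raincoat"
    if temperature_c < 10:      # internal node on temperature (assumed cutoff)
        return "winter coat"
    if temperature_c < 20:      # second temperature threshold (assumed cutoff)
        return "sweater"
    return "t-shirt"            # leaf: warm and likely dry

print(choose_clothing(25, 10))  # t-shirt
print(choose_clothing(5, 80))   # raincoat
```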

The intention of all of this is to help you with building out decision trees that continue to get more complex.

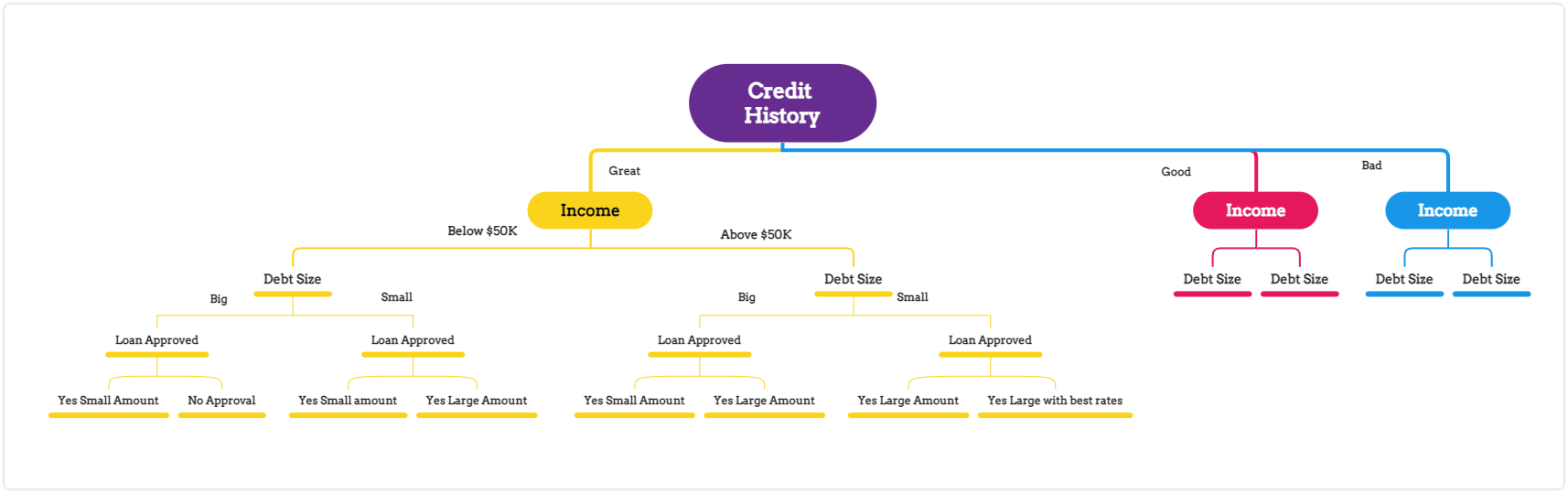

2. Credit History Decision Tree

Next, we'll look at the most complex decision tree example in this article, a type commonly used in business decisions. It is an example of a standard credit review that financial institutions carry out.

All the variables are continuous until you get to the terminal (leaf) node. The leaf nodes, in this case, can be the same, depending on the data points selected. The data set itself is fixed, so this decision tree can be reused many times. You can measure the outcomes against the expected values, and in doing so you've built a manual machine learning system.
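Here is a minimal Python sketch of a hand-built credit tree whose outcomes are measured against expected values. The rules, thresholds, feature names, and past applicants are all hypothetical. `accuracy` reports the fraction of cases where the tree's decision matches the decision that was expected.

```python
def credit_decision(applicant: dict) -> str:
    """Hypothetical hand-built credit tree; rules and thresholds are for illustration only."""
    if applicant["credit_history"] == "bad":
        return "decline"
    if applicant["annual_income"] < 30_000:
        return "review"
    if applicant["debt_to_income"] > 0.4:
        return "review"
    return "approve"

# Hypothetical past applicants paired with the decision that was expected for each.
history = [
    ({"credit_history": "good", "annual_income": 55_000, "debt_to_income": 0.2}, "approve"),
    ({"credit_history": "good", "annual_income": 25_000, "debt_to_income": 0.1}, "review"),
    ({"credit_history": "bad", "annual_income": 80_000, "debt_to_income": 0.3}, "decline"),
    ({"credit_history": "good", "annual_income": 60_000, "debt_to_income": 0.5}, "approve"),
]

def accuracy(tree, labeled_cases) -> float:
    """Fraction of cases where the tree's outcome equals the expected outcome."""
    hits = sum(tree(case) == expected for case, expected in labeled_cases)
    return hits / len(labeled_cases)

print(accuracy(credit_decision, history))  # 0.75
```

The fourth applicant is marked "approve" in the records, but the tree sends that applicant to review, so the tree scores 0.75. A mismatch like this is the signal to revisit the internal nodes.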

This credit decision tree shows only one main branch and outcome. The Good and Bad credit scores would yield similar trees with different categorical data points. Once it is fully built out, you can start from the same default value at the top and work your way down with each new client. This is one of the most common uses of decision trees. It's meant to avoid a greedy algorithm, which picks the split that looks best at each node (as tree-learning algorithms such as CART and ID3 do) rather than following fixed rules.

This type of decision tree is not for that. It's all about using categorical data and applying the same decision rules every time. You can update the internal nodes as necessary. You might also repurpose this decision tree for different loan types and different data features. Either way, the process of supervised learning stays the same.
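For contrast, here is a Python sketch of the greedy approach used by tree-learning algorithms such as CART. For one numeric feature, it tries each candidate threshold and keeps the split with the lowest weighted Gini impurity. The credit scores and repayment labels are hypothetical. A real learner would repeat this step at every node and across all features.

```python
from collections import Counter

def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions (0 means the node is pure)."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())

def best_threshold(values, labels):
    """Greedy step: try each midpoint between distinct values and keep the lowest weighted impurity."""
    pairs = sorted(zip(values, labels))
    distinct = sorted(set(values))
    best = (None, float("inf"))  # stays (None, inf) if every value is identical
    for low, high in zip(distinct, distinct[1:]):
        t = (low + high) / 2
        left = [lab for v, lab in pairs if v <= t]
        right = [lab for v, lab in pairs if v > t]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(pairs)
        if score < best[1]:
            best = (t, score)
    return best

# Hypothetical applicants: credit score and whether the loan was repaid.
scores = [520, 580, 610, 650, 700, 720, 760, 800]
repaid = ["no", "no", "no", "yes", "yes", "no", "yes", "yes"]
print(best_threshold(scores, repaid))
```

With this data, the best split falls at 630.0, with a weighted impurity of about 0.2. The algorithm commits to that split without checking whether a different first split would lead to a better tree overall. That is what makes it greedy.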

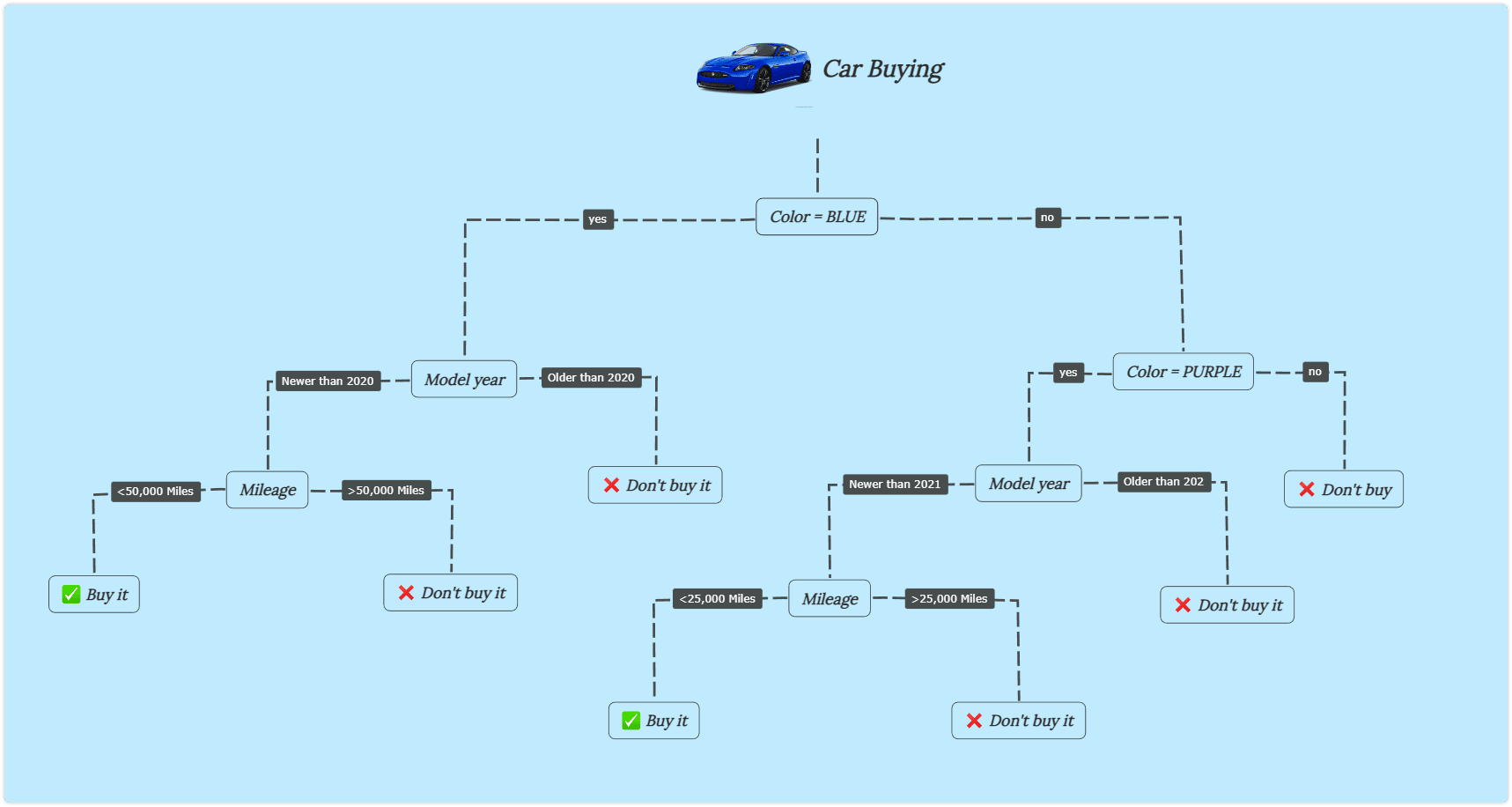

3. Decision Tree for Buying a Car

You can also use a decision tree for a more personal choice, such as buying a car. Starting with the color, you can see how the decision breaks down and how the variables change from branch to branch. For example, if the blue car has more miles, you might opt for the purple car with fewer miles. This is an excellent example of personal use for decision trees.
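One way to read a tree like this is to list every path from the root to a leaf as a plain decision rule. The Python sketch below assumes a hypothetical car tree stored as nested dictionaries. The questions, the 50,000-mile cutoff, and the outcomes are made up for illustration.

```python
# Hypothetical car-buying tree: internal nodes are dicts with a question and branches,
# leaves are plain strings holding the decision.
car_tree = {
    "question": "Preferred color?",
    "branches": {
        "blue": {
            "question": "Mileage under 50,000?",
            "branches": {"yes": "Buy the blue car", "no": "Consider the purple car"},
        },
        "purple": {
            "question": "Mileage under 50,000?",
            "branches": {"yes": "Buy the purple car", "no": "Keep looking"},
        },
    },
}

def list_rules(node, path=()):
    """Walk every root-to-leaf path and return each one as a readable decision rule."""
    if isinstance(node, str):  # leaf node: the decision itself
        return [" AND ".join(path) + " -> " + node]
    rules = []
    for answer, child in node["branches"].items():
        rules.extend(list_rules(child, path + (f"{node['question']} {answer}",)))
    return rules

for rule in list_rules(car_tree):
    print(rule)
```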

Conclusions

Building one decision tree, or many, takes practice and a focus on your decision-making techniques. When you build out a decision tree, be prepared to also have a way to collect the data set. The data set is essential for machine learning, and it all comes down to understanding the examples above.

Mindomo is a decision tree maker with plenty of options to start practicing, and you can pair it with other tools for machine learning. Either way, working with decision trees will help improve your decision-making process and build training data.

Feel free to sign up on Mindomo, build your first decision tree examples, and delve into the world of machine learning. You won't even need extra processing power to build your decision trees. Just remember that you always start at the beginning, with the root node of your decision tree.

Keep it smart, simple, and creative!

The Mindomo Team